Remaking this blog for fun and learning

I wrote about setting up this blog a couple of years ago. It was simple - I would git push a new post to my rented server, where the hugo static site generator would convert it to .html files served by nginx. This worked well, I had no complaints.

Except. Dark mode and syntax highlighting (for programming snippets) would be nice. I use dark mode everywhere and it irked me that my own site didn't support it. I could have modified the blog's existing theme to add these features, but where's the fun in that? Also, I wanted private drafts because they're important to my writing flow.

I started with this template and modified it till it looked like this. Along the way I added dark mode, syntax highlighting and a few features I had on the previous website - RSS subscriptions, tags and time to read a post. I would have added private drafts, but this was solved by changing how I deployed the website. I moved from hosting myself to Cloudflare Pages, which gave me private drafts for free.

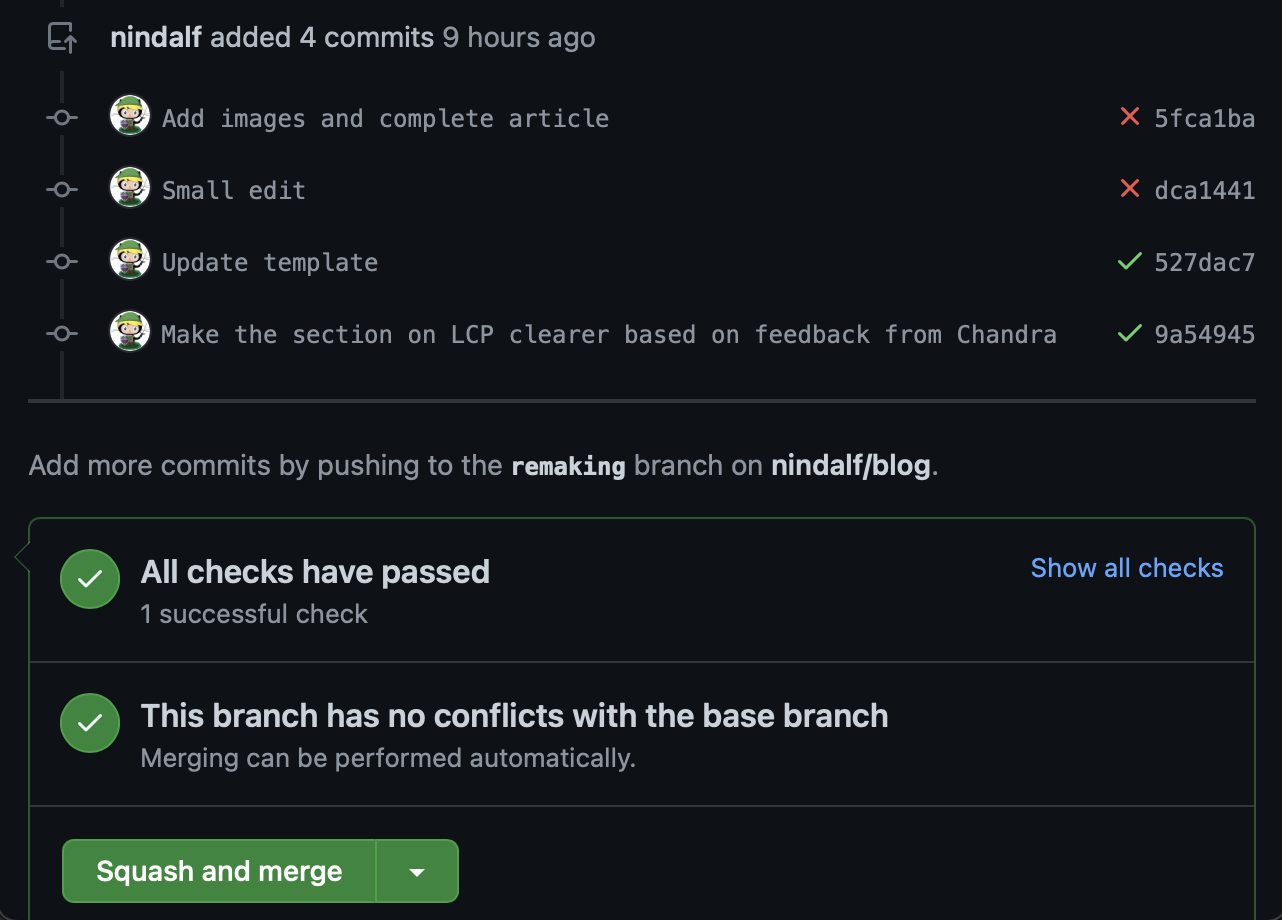

This is my current flow

- Write a post in markdown.

- `git commit` and `gh pr create`.

- Cloudflare's bot comments on the Pull Request (PR) with a link to a private draft.

- I share the link with people, get feedback and update the PR.

- When it's done, merge the PR. It appears on blog.nindalf.com automatically.

If this flow appeals to you, you can check out the template and modify it to suit your needs.

Things I learned along the way

I've been working at a large tech company for the last few years. Most of the infra and tools are built in-house. I admit, I haven't kept up with all the developments in dev infra in the outside world. While many of these things might be obvious to you, they weren't to me and I enjoyed learning about them.

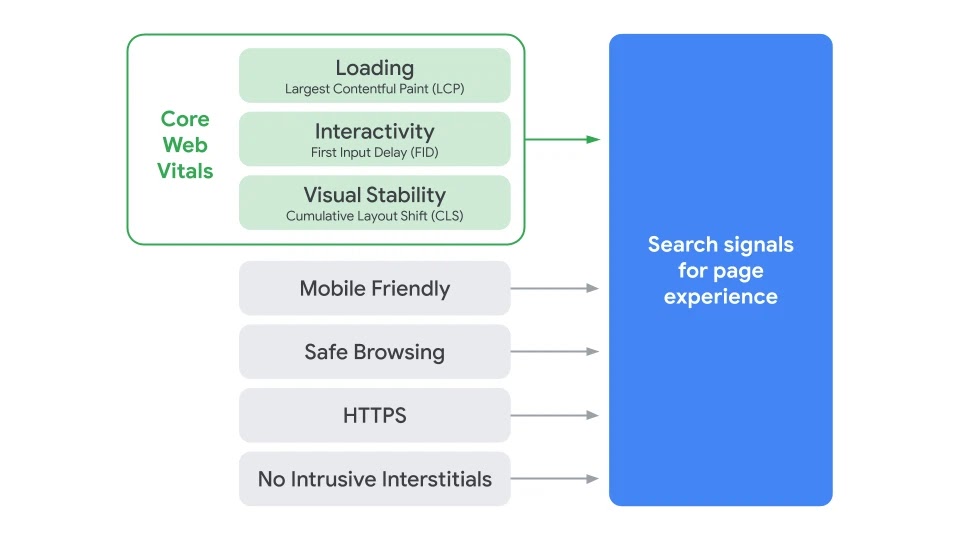

Core Web Vitals

Apart from latency, it's not easy to agree on what metrics measure web performance well. In May 2020, the Chrome team announced Core Web Vitals, a set of metrics and tools that would do that. Later that month, the Google Search team announced that "page experience" would be measured by these metrics. Sites with good page experience would benefit from improved ranking. That's a good enough reason for most web developers to prioritize this. Past Google initiatives, like rewarding the adoption of HTTPS, contributed to HTTPS becoming nearly ubiquitous.

There are 3 metrics for now, but the set will change in future.

- Largest Contentful Paint measures perceived load speed and marks the point in the page load timeline when the page's main content has likely loaded.

- First Input Delay measures responsiveness and quantifies the experience users feel when trying to first interact with the page.

- Cumulative Layout Shift measures visual stability and quantifies the amount of unexpected layout shift of visible page content.
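To make the three metrics concrete, here is a minimal Python sketch that rates a measured value. The threshold numbers are the ones Google publishes in its Core Web Vitals guidance, not something from my own measurements, and the `rate` helper is a hypothetical name used only for illustration.

```python
# Thresholds from Google's published Core Web Vitals guidance (an outside
# fact, not taken from this post). Each entry is (good_up_to, poor_above);
# anything in between is rated "needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, in seconds
    "FID": (100, 300),   # First Input Delay, in milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> str:
    """Rate one measurement of a Core Web Vital (hypothetical helper)."""
    good_up_to, poor_above = THRESHOLDS[metric]
    if value <= good_up_to:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", 2.1)` falls in the "good" band, while `rate("CLS", 0.3)` is rated "poor".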

Here was my experience - I found variance while measuring these. Often I'd re-run it on the same website within seconds of the previous run and get different results. The variance was higher on Google's PageSpeed Insights page compared to Chrome DevTools. This is unsurprising because these metrics are dependent on both the client's network and the client's hardware. Their Insights page probably runs on shared hardware, accounting for that variance.

I figure the right way to track these metrics is using DevTools during development to get a rough idea. I would add them to CI but only to measure large regressions. If the CI is running on shared hardware, these metrics will be noisy. Lastly, I would log these metrics client side from users. This helps us track the experience of actual users, accounting for real world network and devices. It also averages out the noise that we might see if we only took a few ad-hoc measurements on dev machines.
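One way to keep a noisy CI check from crying wolf is to compare the median of several runs against a stored baseline and only fail on a large gap. This is a sketch of that idea rather than something I actually run; the function name and the 25% tolerance are assumptions chosen for illustration.

```python
from statistics import median


def is_large_regression(baseline: float, runs: list[float],
                        tolerance: float = 0.25) -> bool:
    """Return True only when the median run exceeds the baseline by more
    than `tolerance` (0.25 means 25% worse). Lower values are better,
    as with LCP, FID and CLS.

    Taking the median of several runs damps the run-to-run noise seen on
    shared hardware. The default tolerance is an illustrative assumption.
    """
    if not runs:
        raise ValueError("need at least one measurement")
    return median(runs) > baseline * (1 + tolerance)
```

With a baseline LCP of 2.0 seconds, runs of 2.1, 1.9 and 2.3 would pass, while runs of 2.9, 3.1 and 2.6 would be flagged.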

DevTools also tracks 3 other areas (Accessibility, Best Practices, SEO) in addition to Performance, with helpful suggestions for each. For example, I wasn't setting the lang attribute on the topmost <html> tag. This matters to folks who use screen readers. The new blog does better than the previous one, scoring 100 in all areas.

Impact of CDN

It's obvious that CDNs reduce the latency of serving static resources. I was still impressed by the level of improvement, as well as how easy it was to enable the CDN. I measured the min, mean, p50, p90 and p95 responses for the old and new blogs, on CDN and off.

As expected, both blogs on CDN remained low latency from all locations I tested - Oregon, London, Singapore, Sydney. The latency for the non-CDN sites increased with distance from Bangalore, the location of the server. Takeaway - you could get away without a CDN if your users are mostly in one region. But honestly, why would you?
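The summary statistics mentioned above (min, mean, p50, p90, p95) are easy to compute from a list of response times. This is a minimal Python sketch, not the exact tooling I used; it assumes the nearest-rank definition of a percentile, and the function names are hypothetical.

```python
import math
from statistics import mean


def percentile(ordered: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it.
    `ordered` must already be sorted in ascending order."""
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    """Summarize response times (in milliseconds) for one site and location."""
    if not latencies_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(latencies_ms)
    return {
        "min": ordered[0],
        "mean": mean(ordered),
        "p50": percentile(ordered, 50),
        "p90": percentile(ordered, 90),
        "p95": percentile(ordered, 95),
    }
```

Running `summarize` once per site and per location gives a small table that makes the CDN and non-CDN numbers directly comparable.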

Edge computing

I was aware this existed, but until I tried it I had no idea how easy it was to develop and deploy. It took me only a few minutes to deploy a simple worker that would send a customised robots.txt depending on the Hostname. Delving into this further, I found the pricing is so competitive that I'd have to seriously consider it while architecting for bursty loads.

I find that AWS Lambda and its competitors are priced well too. I tried Lambda for a bit but the dev experience wasn't as sharp.
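The logic of the robots.txt worker mentioned above is tiny. A real Cloudflare Worker would be written in JavaScript, so treat this Python version purely as a sketch of the decision it makes. The policy shown (let crawlers index the production hostname, block every other hostname such as preview deployments) is an assumption for illustration, as are the function and constant names.

```python
# Production hostname taken from this post; everything else is illustrative.
PRODUCTION_HOST = "blog.nindalf.com"

ALLOW_ALL = "User-agent: *\nAllow: /\n"
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"


def robots_txt_for(hostname: str) -> str:
    """Pick the robots.txt body for the hostname the request was sent to.

    Assumed policy: crawlers may index production only, so drafts served
    from any other hostname stay out of search results.
    """
    # Normalize case and drop an optional ":port" suffix.
    host = hostname.strip().lower().split(":")[0]
    return ALLOW_ALL if host == PRODUCTION_HOST else DISALLOW_ALL
```

A request for `blog.nindalf.com` would get the permissive file, while a hypothetical preview hostname like `some-branch.example.pages.dev` would get the blocking one.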

RSS

There wasn't much to learn here. Turns out the format for RSS is straightforward. It's simple to generate the XML through string concatenation and write it to the appropriate location - root/index.xml.
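Here is roughly what generating the feed by string concatenation can look like. It is a minimal sketch rather than the code behind this blog: the function name and the shape of the `items` dictionaries are assumptions, and it only emits the handful of RSS 2.0 elements a basic reader needs. The one thing worth getting right is escaping, since a stray `&` or `<` in a title would make the XML invalid.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
from xml.sax.saxutils import escape


def build_rss(title: str, link: str, description: str, items: list[dict]) -> str:
    """Build a small RSS 2.0 feed by concatenating strings.

    Each item is a dict with "title", "link" and "published" (a
    timezone-aware datetime). These key names are hypothetical.
    """
    xml = '<?xml version="1.0" encoding="UTF-8"?>\n'
    xml += '<rss version="2.0">\n<channel>\n'
    xml += f"<title>{escape(title)}</title>\n"
    xml += f"<link>{escape(link)}</link>\n"
    xml += f"<description>{escape(description)}</description>\n"
    for item in items:
        xml += "<item>\n"
        xml += f"<title>{escape(item['title'])}</title>\n"
        xml += f"<link>{escape(item['link'])}</link>\n"
        # RSS expects RFC 822 style dates, which format_datetime produces.
        xml += f"<pubDate>{format_datetime(item['published'])}</pubDate>\n"
        xml += "</item>\n"
    xml += "</channel>\n</rss>\n"
    return xml


example_feed = build_rss(
    "Example blog",
    "https://example.com/",
    "Posts about tools & learning",
    [
        {"title": "Tom & Jerry <3", "link": "https://example.com/a/",
         "published": datetime(2021, 1, 2, tzinfo=timezone.utc)},
        {"title": "Second post", "link": "https://example.com/b/",
         "published": datetime(2021, 2, 3, tzinfo=timezone.utc)},
    ],
)
```

Writing the result out is then a single call such as `pathlib.Path("index.xml").write_text(example_feed)` in the site's output root.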

CSS

As a backend developer, I'm a bit like Squidward here. But creating the blog from scratch meant I couldn't avoid messing around with CSS. It was a good opportunity to flex rarely used muscles and to learn about (relatively) new approaches to writing CSS like Tailwind.

Keep learning

I learned so much from just a few hours of making something simple. I hope this post is a reminder to myself to keep investing this time now and then.